What is Artificial Intelligence?

Marvin Minsky, often described as one of the founding fathers of artificial intelligence (AI), defined it as:

“Artificial intelligence is the science of making machines do things that would require intelligence if done by men.”

Importantly, this doesn’t mean the machines themselves have to be intelligent. A dumb machine running a clever piece of software, designed and written by humans, counts as AI. Although digital technology has been an ordinary part of scientific and bureaucratic life since the 1950s, and of everyday life since the 1980s, sophisticated marketing campaigns still have most people convinced that this technology is something new and potentially revolutionary.

Are There Limits?

Yes, certainly. The first thing to remember about the limits of AI is that they change over time: the limits in the 1970s were different to today’s.

Secondly, there are two types of AI: Hollywood AI and Real AI. Hollywood AI is the kind that would power the robot butler, might theoretically become self-aware and take over the government, and could result in a real-life Terminator and other such apocalyptic visions. Real AI is what we actually have today, and it is purely mathematical. It’s less exciting than Hollywood AI, but it works surprisingly well, and we can do a variety of interesting things with it.

The important distinction is this: Hollywood AI is what we want, what we hope for, and what we imagine (minus the Terminator). Real AI is what we have. It’s the difference between dreams and reality.

Positive Asymmetry Bias

Human nature has an in-built bias towards positive asymmetry (a tendency to emphasise only the most positive cases). So, when Elon Musk says that we will have fully driverless cars within two years, (1) it is widely reported and (2) we believe him. When John Krafcik, CEO of Waymo (formerly Google’s self-driving car project), describes autonomous vehicle technology as “really, really hard” and says, as he did in January 2019, that “fully autonomous cars that can drive in any conditions and on any road without human input will never exist”, it is hardly reported.

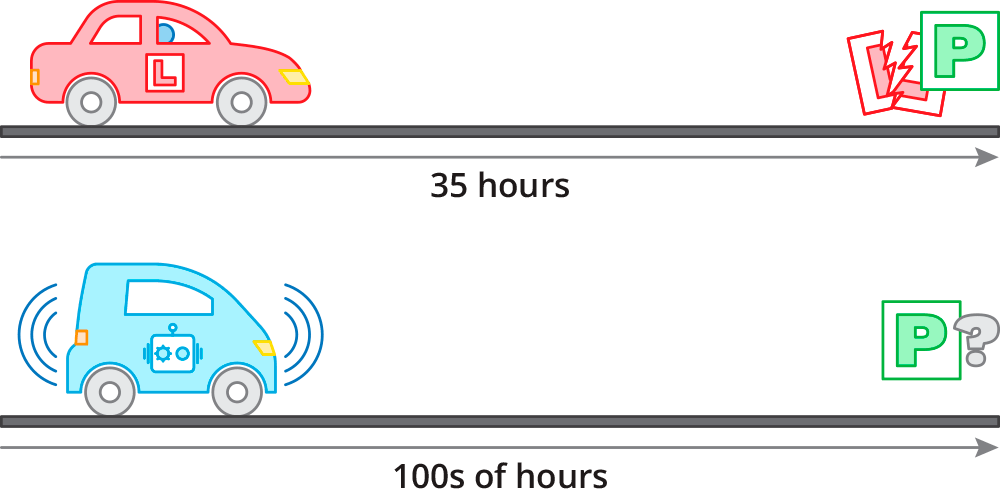

For me, nothing brings home the limitations of current AI quite like this: a human can learn to drive a car with an average of 35 hours of training, without crashing into anything. How many millions of hours have we spent training driverless cars? Have they surpassed human drivers? However you answer these questions, we don’t yet have an AI advanced enough to learn to drive in 35 hours!

Ultimately, everything executed on a computer comes down to maths, and there are fundamental limits to what you can (and should) do with it. Real AI is mathematics: a mathematical method for prediction. It can give you the most likely answer to any question that can be answered with a number; in other words, it performs quantitative prediction. Real AI is statistics on steroids.

Real AI works by analysing an existing dataset, identifying patterns and probabilities in that dataset, and codifying these patterns and probabilities into a computational construct called a model. The model is a kind of black box: we feed new data into it and get out a numerical answer that predicts something.
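To make the ‘statistics on steroids’ idea concrete, here is a minimal sketch, assuming the simplest possible model: a straight line fitted by ordinary least squares. The data points are made up for illustration, and the function names `fit_line` and `predict` are hypothetical. Fitting codifies the pattern in the existing dataset into just two numbers (the model); predicting runs new data through them.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares; the pair is the 'model'."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How x and y vary together, relative to how much x varies on its own.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Run a new input through the fitted model to get a numerical prediction."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical past observations (inputs and the values that were measured for them).
model = fit_line([1, 2, 3, 4, 5], [3.1, 4.9, 7.2, 8.8, 11.0])
print(round(predict(model, 6), 2))  # 12.91
```

Real systems fit far more parameters to far more data, but the principle is the same: the model is a set of numbers extracted from past data, and a prediction is arithmetic on those numbers.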

Machine learning, deep learning, neural networks and predictive analytics are some of the Real AI concepts that are currently popular. For every AI system that exists today, there is a logical, scientific explanation for how it works.

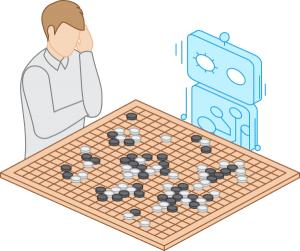

Ever since Alan Turing’s 1950 paper proposing the Turing Test for machines that think, computer scientists have used chess as a marker of ‘intelligence’ in machines. Nearly half a century was spent trying to build a machine that could beat the world’s best human players, until IBM’s Deep Blue finally defeated world chess champion Garry Kasparov in 1997. AlphaGo, the program that won all three of its games against the world’s top-ranked Go player, Ke Jie, in 2017, is often cited by the media as proof that Hollywood AI is just a few years away. Looking closely at the program and how it was created reveals a different story.

AlphaGo is a program designed and built by humans, running on (dumb) hardware, just like any other computer program. Its developers designed an algorithm that would predict the optimal next move for any given game position. They started by gathering data: games recorded in a structured notation for Go moves that players and researchers had used for years. Ultimately, the AlphaGo team used around thirty million board positions taken from these game records to train the algorithm. The dataset wasn’t randomly generated; those positions came from actual games played by actual people (and some computers). The programmers used this data to train the model they called AlphaGo, and combined it with a method called Monte Carlo tree search, which plays out many possible continuations of a position to estimate which move has the highest probability of winning. This is not ‘intelligence’; it is brute-force computation over a very large dataset to predict the next best move.
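AlphaGo’s actual search combines Monte Carlo tree search with neural networks trained on game records, which is far beyond a short example. As a rough illustration of the underlying idea only, here is a sketch of flat Monte Carlo search, a much simpler relative of Monte Carlo tree search, applied to noughts and crosses (tic-tac-toe) rather than Go. The game, the board encoding and all function names are assumptions for illustration. For each legal move it plays many random games to the end and picks the move with the highest win rate; nothing in it ‘understands’ the game, it simply counts outcomes.

```python
import random

# Winning lines on a 3x3 board indexed 0..8, row by row (illustrative encoding).
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if a player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def legal_moves(board):
    return [i for i, cell in enumerate(board) if cell == " "]


def random_playout(board, to_move, rng):
    """Play uniformly random moves until the game ends; return the winner, or None for a draw."""
    board = list(board)
    while True:
        w = winner(board)
        if w is not None:
            return w
        moves = legal_moves(board)
        if not moves:
            return None
        board[rng.choice(moves)] = to_move
        to_move = "O" if to_move == "X" else "X"


def flat_monte_carlo_move(board, player, playouts=200, seed=0):
    """Pick the move whose random playouts give `player` the highest win rate."""
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    opponent = "O" if player == "X" else "X"
    win_rates = {}
    for move in legal_moves(board):
        child = list(board)
        child[move] = player
        wins = sum(random_playout(child, opponent, rng) == player for _ in range(playouts))
        win_rates[move] = wins / playouts
    best = max(win_rates, key=win_rates.get)
    return best, win_rates


# X to move; playing square 2 completes the top row.
board = ["X", "X", " ",
         "O", "O", " ",
         " ", " ", " "]
move, rates = flat_monte_carlo_move(board, "X")
print(move, rates[move])  # 2 1.0
```

The winning move is found purely by brute-force sampling and counting, which is the sense in which the search is ‘dumb’; AlphaGo makes the same kind of search practical for Go by using its trained model to decide which moves are worth exploring.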

There are some important points here for AI limitations:

- The ‘intelligence’ is in the human algorithm design.

- The computer that the (human-created) software ran on is dumb.

- The software predicts the best next move from human-created training data.

- AlphaGo would be no good at chess (or any other task).

The MICology low-code platform can snapshot data fields each time a human makes a decision. Datasets that store this process data alongside the decision outcomes have proven very successful in formulating complex decision matrices that previously relied on human intervention. The important point is that, in these cases, the resulting decisions are no better than the human-produced data behind them.
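The point that a model trained on human decisions is no better than those decisions can be shown with a minimal sketch. This does not use or describe the MICology platform; the snapshot fields, the data and the 1-nearest-neighbour rule below are hypothetical choices for illustration. The ‘model’ simply returns the decision a human made for the most similar past snapshot, so a questionable human decision in the data is faithfully repeated.

```python
import math

# Hypothetical decision snapshots: the data fields a human saw when deciding
# (claim amount in thousands, number of previous claims) and the decision made.
SNAPSHOTS = [
    ((2.0, 0), "approve"),
    ((3.5, 1), "approve"),
    ((9.0, 4), "refer"),
    ((12.0, 2), "refer"),
    ((4.0, 5), "refer"),
    ((10.5, 3), "approve"),  # an inconsistent human decision the model will copy
]


def predict_decision(snapshots, fields):
    """Return the decision recorded for the closest past snapshot (1-nearest neighbour).

    Fields are compared by plain Euclidean distance with no scaling, a simplifying
    assumption; real features would normally be normalised first.
    """
    _, decision = min(snapshots, key=lambda snap: math.dist(snap[0], fields))
    return decision


print(predict_decision(SNAPSHOTS, (2.5, 0)))   # approve
print(predict_decision(SNAPSHOTS, (10.0, 3)))  # approve (copied from the questionable snapshot)
print(predict_decision(SNAPSHOTS, (4.5, 5)))   # refer
```

More sophisticated models generalise more smoothly than this, but they share the same dependency: if the recorded human decisions are inconsistent or wrong, the learned decisions inherit those flaws.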

Better analysis comes from combining data with actual outcomes. Insurance claims data usually involves real input data from various sources together with actual settlement values (the outcomes). Using AI to predict the outcome from the input data has proven very informative.

So, the limitations of current Real AI are:

- The construction of the algorithm/program must be undertaken by highly skilled humans.

- It is limited by the available (dumb) hardware.

- It is mathematical and is largely limited to mathematical prediction.

- Each AI implementation has a limited, specific purpose.

- AI needs large amounts of clean training data.

There’s a saying: when all you have is a hammer, everything looks like a nail. AI currently seems to be the hammer, and every problem under the sun the nail. We need to stop treating every problem like a digital nail; AI is not always the solution. We need to make more thoughtful decisions about when, why and how we use such technology, bearing its limitations in mind.

Minsky also said: “Speed is what distinguishes intelligence. No bird discovers how to fly: evolution used a trillion bird-years to ‘discover’ that – where merely hundreds of person-years sufficed”.

Technologies such as AI can help humans master complex issues more quickly. They can discover previously unknown algorithms and even see patterns in data and images that were not apparent to human observers; however, there are currently limitations.